1. Natural Language Processing Techniques

Natural Language Processing (NLP) is a subfield of computer science and artificial intelligence that focuses on the interaction between computers and human language. NLP techniques enable computers to understand, interpret, and generate human language. The following are some common NLP techniques:

- Tokenization: It involves splitting a text into smaller units called tokens (usually words, numbers, and punctuation marks), which can then be analyzed further.

- Part-of-speech (POS) tagging: It is the process of assigning a part of speech, such as noun, verb, or adjective, to each word in a text.

- Named Entity Recognition (NER): It involves identifying and categorizing named entities such as people, organizations, locations, and dates in a text.

- Sentiment Analysis: It is the process of determining the emotional tone or sentiment of a text, typically classified as positive, negative or neutral.

- Dependency Parsing: It is the process of analyzing the grammatical structure of a sentence to determine the relationships (dependencies) between its words, for example, which word is the subject or the object of a verb.

- Machine Translation: It is the process of translating a text from one language to another.

- Text Summarization: It is the process of generating a brief summary of a longer text.
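To make tokenization and sentiment analysis more concrete, here is a minimal C# sketch of regex-based tokenization followed by a toy lexicon-based sentiment score. The class name NlpBasics, the regular expression, and the tiny positive/negative word lists are assumptions made for illustration; practical systems usually rely on trained models or established NLP libraries.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

// Illustrative sketch only: regex tokenization plus a toy lexicon-based
// sentiment score. The word lists are hypothetical and far too small for real use.
public static class NlpBasics
{
    // A token is either a run of letters/digits (optionally with one internal
    // apostrophe part, as in "don't") or a single punctuation/symbol character.
    private static readonly Regex TokenPattern =
        new Regex(@"[\p{L}\p{N}]+(?:'[\p{L}]+)?|[^\s\p{L}\p{N}]");

    private static readonly HashSet<string> Positive =
        new HashSet<string> { "good", "great", "excellent", "happy", "love" };
    private static readonly HashSet<string> Negative =
        new HashSet<string> { "bad", "terrible", "poor", "sad", "hate" };

    // Split text into tokens, in order of appearance.
    public static List<string> Tokenize(string text) =>
        TokenPattern.Matches(text).Cast<Match>().Select(m => m.Value).ToList();

    // Score = (#positive tokens) - (#negative tokens); the sign picks the label.
    public static string Sentiment(string text)
    {
        int score = 0;
        foreach (string token in Tokenize(text.ToLowerInvariant()))
        {
            if (Positive.Contains(token)) score++;
            else if (Negative.Contains(token)) score--;
        }
        return score > 0 ? "positive" : score < 0 ? "negative" : "neutral";
    }
}
```

For example, Tokenize("Don't stop!") yields the tokens Don't, stop, and !, and Sentiment("The service was terrible.") returns "negative". Counting words this way ignores context: a phrase such as "not good" still scores as positive, which is one reason trained classifiers are generally preferred.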

2. Natural Language Processing Fields

Natural Language Processing (NLP) is a vast field that encompasses several subfields. Some of the prominent fields in NLP are:

- Text Classification: It involves assigning texts to predefined categories based on their content, as in spam detection, sentiment analysis, topic classification, and document classification.

- Named Entity Recognition (NER): It involves identifying the named entities in a text, such as people, organizations, and places.

- Machine Translation: It involves translating text from one language to another.

- Question Answering: It involves answering questions posed in natural language, such as in chatbots, search engines, and virtual assistants.

- Speech Recognition: It involves converting spoken language into text, such as in virtual assistants and speech-to-text [STT] applications.

- Text-to-Speech [TTS] Conversion: It involves converting written text into spoken audio (also called speech synthesis), such as in virtual assistants and screen readers.

- Sentiment Analysis: It involves classifying the emotional tone of a text as positive, negative, or neutral.

- Natural Language Generation: It involves generating text from structured data, such as in automated report generation.
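Text classification can be illustrated with a multinomial Naive Bayes classifier, one classic and simple technique for tasks such as spam detection. The text does not prescribe any particular method, so treat this as one possible sketch. The class NaiveBayesClassifier and its Train and Predict methods are hypothetical names, and the word splitting is deliberately naive.

```csharp
using System;
using System.Collections.Generic;

// Toy multinomial Naive Bayes text classifier with add-one (Laplace) smoothing.
// Illustrative only: naive splitting on spaces and a few punctuation marks.
public class NaiveBayesClassifier
{
    private readonly Dictionary<string, Dictionary<string, int>> wordCounts =
        new Dictionary<string, Dictionary<string, int>>();                              // label -> word -> count
    private readonly Dictionary<string, int> docCounts = new Dictionary<string, int>();  // label -> #documents
    private readonly Dictionary<string, int> totalWords = new Dictionary<string, int>(); // label -> #word tokens
    private readonly HashSet<string> vocabulary = new HashSet<string>();
    private int totalDocs;

    private static readonly char[] Separators = { ' ', '.', ',', '!', '?' };

    private static string[] Words(string text) =>
        text.ToLowerInvariant().Split(Separators, StringSplitOptions.RemoveEmptyEntries);

    private static int Get(Dictionary<string, int> d, string key) =>
        d.TryGetValue(key, out int value) ? value : 0;

    // Record one labeled training document.
    public void Train(string text, string label)
    {
        totalDocs++;
        docCounts[label] = Get(docCounts, label) + 1;
        if (!wordCounts.TryGetValue(label, out Dictionary<string, int> counts))
        {
            counts = new Dictionary<string, int>();
            wordCounts[label] = counts;
        }
        foreach (string w in Words(text))
        {
            counts[w] = Get(counts, w) + 1;
            totalWords[label] = Get(totalWords, label) + 1;
            vocabulary.Add(w);
        }
    }

    // Return the label with the highest log-probability, or null if untrained.
    public string Predict(string text)
    {
        string best = null;
        double bestScore = double.NegativeInfinity;
        foreach (KeyValuePair<string, int> entry in docCounts)
        {
            Dictionary<string, int> counts = wordCounts[entry.Key];
            double denominator = Get(totalWords, entry.Key) + vocabulary.Count;
            double score = Math.Log((double)entry.Value / totalDocs);    // log prior
            foreach (string w in Words(text))
                score += Math.Log((Get(counts, w) + 1) / denominator);   // smoothed log likelihood
            if (score > bestScore)
            {
                bestScore = score;
                best = entry.Key;
            }
        }
        return best;
    }
}
```

After training on a few labeled messages (for example, "win a free prize now" and "free money click now" as spam, and "meeting at noon tomorrow" and "please review the project report" as ham), Predict("free prize money") returns "spam". Add-one smoothing keeps a word that never appeared with a label from driving that label's probability to zero, and summing logarithms instead of multiplying probabilities avoids numeric underflow on longer texts.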

3. Speech Synthesizer and Recognizer [TTS and STT] Application

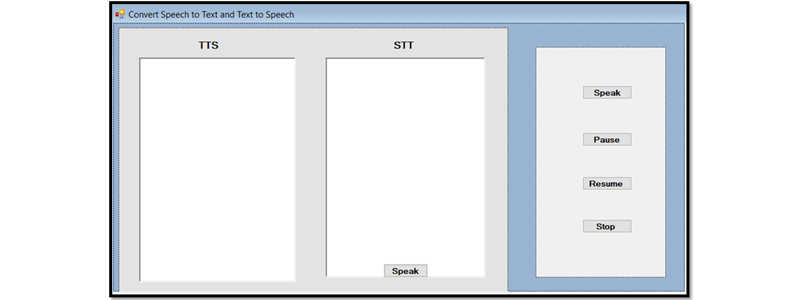

In Visual Studio, the following windows form can be created:

Figure1. Speech Synthesizer and Recognizer [TTS and STT] Application

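Add using directives for the System.Speech.Synthesis and System.Speech.Recognition namespaces, which contain the classes for speech synthesis and speech recognition, respectively. These classes let you create speech synthesizers and speech recognizers in C# code. The project also needs a reference to System.Speech: in a .NET Framework project, add a reference to the System.Speech assembly; in a .NET (Core) project, install the System.Speech NuGet package. In both cases, these speech APIs work only on Windows.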

using System;                      // EventArgs (usually already present in a Forms project)
using System.Speech.Recognition;
using System.Speech.Synthesis;
using System.Windows.Forms;        // MessageBox, RichTextBox (usually already present)

Declare a form-level field named g that holds a new SpeechSynthesizer instance, so that all the button handlers share the same synthesizer:

SpeechSynthesizer g = new SpeechSynthesizer();

Define an event handler method named recognizer_SpeechRecognized. This method is called when the SpeechRecognitionEngine object raises the SpeechRecognized event, which occurs when speech is recognized by the engine.

The method takes two parameters: sender, the object that raised the event (here, the SpeechRecognitionEngine object), and e, an instance of the SpeechRecognizedEventArgs class. Its Result property describes the recognized speech, including the recognized text (e.Result.Text) and the recognizer's confidence in that result (e.Result.Confidence). In the body of the method, the recognized text is appended to the RichTextBox control named richTextBox1, followed by a carriage return and line feed (\r\n), so that each recognized phrase appears on its own line.

private void recognizer_SpeechRecognized(object sender, SpeechRecognizedEventArgs e)
{
    // Append the recognized phrase, then start a new line.
    richTextBox1.AppendText(e.Result.Text + "\r\n");
}
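Because e.Result also carries a Confidence value from 0.0 (low) to 1.0 (high), a handler can skip results the engine is unsure about, which can help keep background noise out of the transcript. The sketch below keeps that decision in a small pure function so it can be tested without a microphone. The class RecognitionFilter, the method ShouldDisplay, and the 0.6 default threshold are hypothetical choices, not part of System.Speech.

```csharp
// Hypothetical helper, kept free of System.Speech so it compiles on any platform.
public static class RecognitionFilter
{
    // A recognized phrase is worth displaying when it contains some text and
    // its confidence (0.0 to 1.0) reaches the chosen threshold.
    public static bool ShouldDisplay(string text, float confidence, float minConfidence = 0.6f) =>
        !string.IsNullOrWhiteSpace(text) && confidence >= minConfidence;
}
```

Inside the handler, the call could look like `if (RecognitionFilter.ShouldDisplay(e.Result.Text, e.Result.Confidence)) richTextBox1.AppendText(e.Result.Text + "\r\n");`. A suitable threshold depends on the microphone, the speaker, and the environment, so it is best tuned by experiment.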

1. Build Speak Button

Check whether the Text property of the RichTextBox control named richTextBox1 contains any text. If it does, the handler disposes of the current SpeechSynthesizer, creates a new instance, and calls SpeakAsync, which speaks the text without blocking the user interface. Otherwise, it shows a message asking the user to enter some text.

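private void button1_Click(object sender, EventArgs e)
{
    // Speak only when the box contains some non-whitespace text.
    if (!string.IsNullOrWhiteSpace(richTextBox1.Text))
    {
        // Replace the previous synthesizer with a fresh one before speaking.
        g.Dispose();
        g = new SpeechSynthesizer();
        // SpeakAsync returns immediately, so the form stays responsive.
        g.SpeakAsync(richTextBox1.Text);
    }
    else
    {
        MessageBox.Show("Enter some text.");
    }
}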

2. Build Pause Button

Check if the State property of a SpeechSynthesizer object (g) is equal to SynthesizerState.Speaking. If the SpeechSynthesizer object is currently speaking (i.e., it's in the middle of synthesizing and playing speech), the Pause method is called on the SpeechSynthesizer object to pause the speech. The Pause method pauses the speech synthesis and playback but doesn't release any resources used by the SpeechSynthesizer object.

private void button2_Click(object sender, EventArgs e)
{
    // Pause only while speech is being synthesized and played.
    if (g.State == SynthesizerState.Speaking)
    {
        g.Pause();
    }
}

3. Build Resume Button

Check whether the SpeechSynthesizer object g is not null and whether its State property equals SynthesizerState.Paused. If both conditions hold, call the Resume method to continue speech synthesis and playback from where it was paused.

private void button3_Click(object sender, EventArgs e)
{
    // Resume only a synthesizer that exists and is currently paused.
    if (g != null && g.State == SynthesizerState.Paused)
    {
        g.Resume();
    }
}

4. Build Stop Button

Check whether the SpeechSynthesizer object g is not null. If it is not, stop playback by calling its Dispose method, which releases the resources the synthesizer holds, such as memory and audio resources. It is important to dispose of any object that implements the IDisposable interface (such as SpeechSynthesizer) when you are done with it, to avoid resource leaks. Because the other buttons keep using g, the handler then assigns a new SpeechSynthesizer to it; otherwise, pressing Pause or Resume after Stop would access a disposed object.

private void button4_Click(object sender, EventArgs e)
{
    if (g != null)
    {
        // Stop playback and release the synthesizer's resources.
        g.Dispose();
        // Create a fresh instance so that Speak, Pause, and Resume can keep using g.
        g = new SpeechSynthesizer();
    }
}
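Taken together, the Speak, Pause, Resume, and Stop handlers form a small state machine over the synthesizer's state. The sketch below models those transitions in plain C# so they can be checked without audio hardware. PlaybackState is a local stand-in that mirrors the Ready, Speaking, and Paused members of SynthesizerState, and SynthesizerButtons is a hypothetical helper. The model leaves out one real behavior: the synthesizer also returns to Ready on its own when it finishes speaking.

```csharp
// Local stand-in for System.Speech.Synthesis.SynthesizerState (same member names),
// so the sketch compiles without System.Speech.
public enum PlaybackState { Ready, Speaking, Paused }

// Hypothetical model of the four synthesizer buttons: each method applies the
// same guard as the corresponding handler and returns the resulting state.
public static class SynthesizerButtons
{
    // Speak: needs non-empty text; always restarts with a fresh synthesizer.
    public static PlaybackState Speak(PlaybackState current, string text) =>
        string.IsNullOrWhiteSpace(text) ? current : PlaybackState.Speaking;

    // Pause: only has an effect while speaking.
    public static PlaybackState Pause(PlaybackState current) =>
        current == PlaybackState.Speaking ? PlaybackState.Paused : current;

    // Resume: only has an effect while paused.
    public static PlaybackState Resume(PlaybackState current) =>
        current == PlaybackState.Paused ? PlaybackState.Speaking : current;

    // Stop: ends playback from any state; the parameter is kept for a uniform signature.
    public static PlaybackState Stop(PlaybackState current) => PlaybackState.Ready;

    // Which buttons would actually do something in a given state.
    public static (bool speak, bool pause, bool resume, bool stop) Enabled(PlaybackState s) =>
        (true, s == PlaybackState.Speaking, s == PlaybackState.Paused, s != PlaybackState.Ready);
}
```

One possible use of Enabled is to grey out buttons that would have no effect, for example by setting button2.Enabled to SynthesizerButtons.Enabled(state).pause after each transition; the handlers in this section do not do this themselves.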

5. Build Listen (Speech Recognition) Button

Create a SpeechRecognitionEngine object (recognizer) and set its input to the default audio device, usually the microphone, by calling SetInputToDefaultAudioDevice. Next, create a DictationGrammar and assign it to the Grammar variable grammar. A DictationGrammar lets the engine recognize free-form speech (dictation) in the language of the system's default speech recognizer, rather than only a fixed list of phrases. Then call LoadGrammar, passing grammar as the argument, to load it into the engine. Next, attach recognizer_SpeechRecognized to the engine's SpeechRecognized event using the += operator; the engine raises this event each time it recognizes speech input. Finally, RecognizeAsync(RecognizeMode.Multiple) starts asynchronous recognition that keeps listening after each recognized phrase instead of stopping after the first one. The engine is stored in a form-level field and created only once, so that clicking the button again does not start a second engine and attach the handler twice, which would duplicate the recognized text.

SpeechRecognitionEngine recognizer;   // form-level field, created on the first click

private void button5_Click(object sender, EventArgs e)
{
    // Already listening: do not create a second engine.
    if (recognizer != null) return;

    recognizer = new SpeechRecognitionEngine();
    recognizer.SetInputToDefaultAudioDevice();            // default microphone
    Grammar grammar = new DictationGrammar();             // free-form dictation
    recognizer.LoadGrammar(grammar);
    recognizer.SpeechRecognized += recognizer_SpeechRecognized;
    recognizer.RecognizeAsync(RecognizeMode.Multiple);    // keep listening after each phrase
}

About the Author

Samar Mouti is an Associate Professor in Information Technology and the Head of the Information Technology Department at Khawarizmi International College, Abu Dhabi/Al Ain, United Arab Emirates. Her research interests include Artificial Intelligence (AI) and its applications. She has participated in around 68 events and academic activities and has earned about 32 professional development certificates. She is an editorial board member of many international journals.